Transform Your Spark Performance

Unlock the full potential of your data processing with our expert Spark optimization services. We help businesses achieve faster processing times, reduced costs, and improved efficiency.

The Challenge

When working with big data, writing Spark code is only the beginning: without optimization, processing times can become excessively long. The typical workflow runs from writing the code through to continuous processing, and proper optimization along the way is key to improving efficiency and reducing execution time.

Our Technology Stack

Leveraging cutting-edge technologies to deliver exceptional big data solutions

Powerful Technology Stack for Big Data Solutions

We leverage industry-leading technologies to build robust, scalable, and efficient big data solutions. Our carefully chosen stack ensures optimal performance and reliability.

Apache Spark

Lightning-fast unified analytics engine for large-scale data processing

Hadoop

Distributed storage and processing of big data across clusters

Apache Kafka

High-throughput distributed streaming platform

Monitoring Tools

Comprehensive monitoring and analytics for system performance

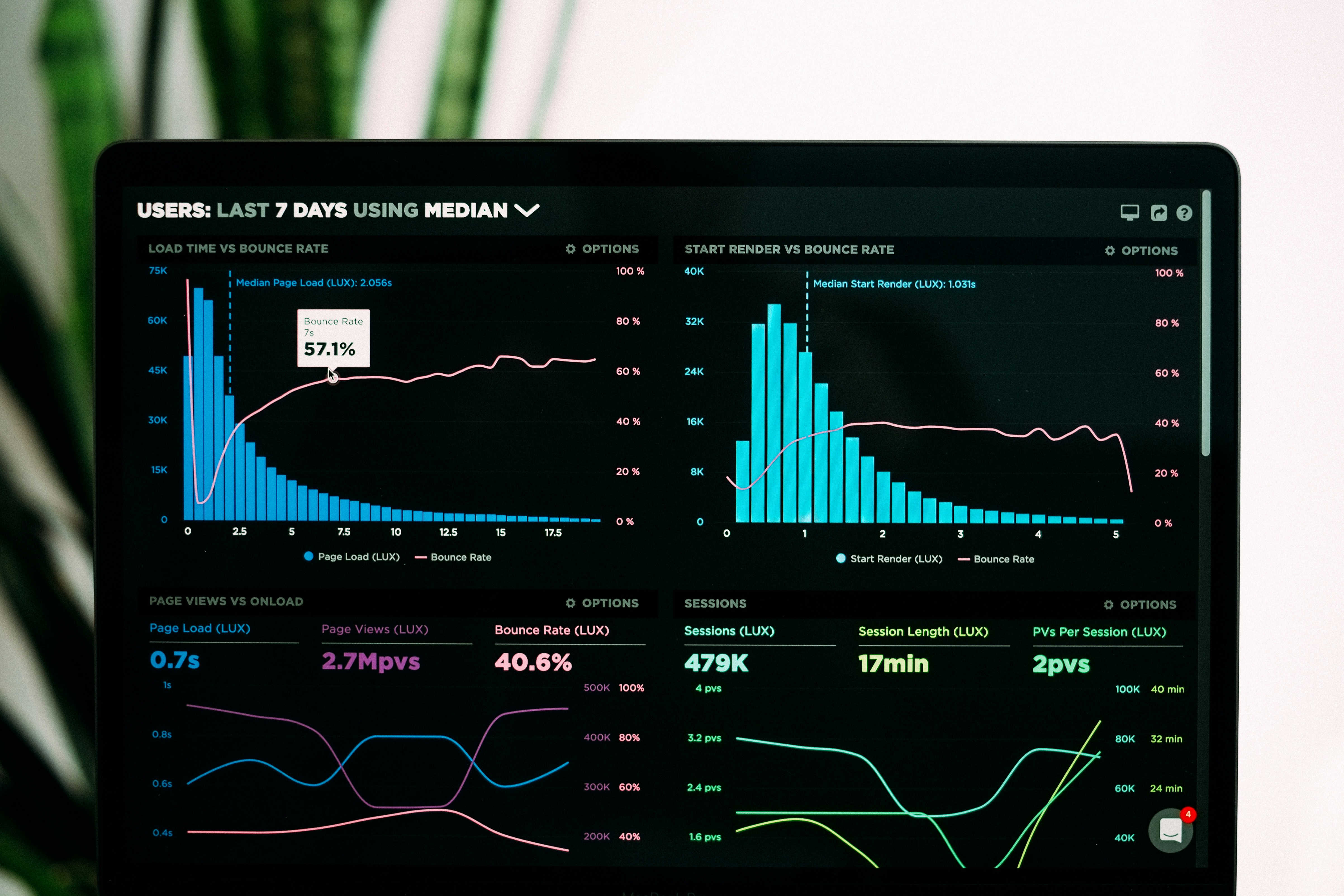

Performance Metrics

Comprehensive monitoring and analytics across your Spark infrastructure

Master Node Metrics

Worker Node Metrics

Job Metrics

Our Optimization Process

A step-by-step approach to enhance your Spark performance

- Review current Spark architecture

- Identify system bottlenecks

- Analyze resource allocation

- Document existing configurations

- Run baseline performance tests

- Capture all output data

- Record execution times

- Generate test data snapshots

- Save all output results

- Document data patterns

- Create checksum validations

- Store row counts and summaries

- Record CPU/Memory usage

- Measure processing time

- Document shuffle patterns

- Track resource costs

- Apply performance improvements

- Optimize data partitioning

- Implement caching strategy

- Tune Spark configurations

- Rerun performance tests

- Capture new output data

- Measure execution times

- Generate test reports

- Compare with baseline data

- Verify data integrity

- Validate checksums match

- Confirm row counts identical

- Compare performance metrics

- Verify output consistency

- Document improvements

- Calculate cost savings

- Generate optimization report

- Prepare implementation guide

- Document best practices

- Train team on changes
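
To make the validation steps above more concrete, here is a minimal sketch of how a baseline run could be compared with an optimized run using row counts, an order-independent checksum, and execution time. It is an illustration under stated assumptions, not our production tooling: the names `RunSnapshot`, `snapshot`, and `compare` are hypothetical, and the rows are plain Python tuples standing in for job output that would normally be read from Spark.

```python
import hashlib
from dataclasses import dataclass
from typing import Iterable, Sequence


@dataclass(frozen=True)
class RunSnapshot:
    """Summary of one job run (hypothetical structure for illustration)."""
    row_count: int
    checksum: str
    seconds: float


def snapshot(rows: Iterable[Sequence[object]], seconds: float) -> RunSnapshot:
    """Build a row count and an order-independent checksum for a run's output."""
    combined = 0
    count = 0
    for row in rows:
        # Hash each row on its own, then add the digests modulo 2**256 so the
        # result does not depend on the order in which rows were produced.
        # Addition (rather than XOR) keeps duplicate rows from cancelling out.
        encoded = "\x1f".join(repr(value) for value in row).encode("utf-8")
        digest = hashlib.sha256(encoded).digest()
        combined = (combined + int.from_bytes(digest, "big")) % (1 << 256)
        count += 1
    return RunSnapshot(count, f"{combined:064x}", seconds)


def compare(baseline: RunSnapshot, optimized: RunSnapshot) -> dict:
    """Check data integrity and compute the speedup of the optimized run."""
    if optimized.seconds <= 0:
        raise ValueError("optimized run time must be positive")
    return {
        "row_counts_match": baseline.row_count == optimized.row_count,
        "checksums_match": baseline.checksum == optimized.checksum,
        "speedup": baseline.seconds / optimized.seconds,
    }


# Assumed example data: same rows in a different order, with a faster second run.
baseline = snapshot([(1, "a"), (2, "b")], seconds=120.0)
optimized = snapshot([(2, "b"), (1, "a")], seconds=30.0)
report = compare(baseline, optimized)
print(report)
```

Because the checksum ignores row order, a change in partitioning that reorders the output does not register as a data difference, while any changed, missing, or extra row does. The sample values and timings are made up for the example.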

Benefits of Our Spark Optimization

Transform your data processing capabilities with our expert optimization services

Enhanced Performance

Significantly reduce job execution time and improve resource utilization through our optimized processing techniques

Cost Reduction

Optimize resource allocation and reduce infrastructure costs with efficient resource management

Enhanced Scalability

Handle larger datasets and concurrent operations efficiently with our scalable architecture

Improved Reliability

Ensure consistent performance and fewer failures with robust error handling

Advanced Monitoring

Real-time insights and comprehensive monitoring of your Spark applications

24/7 Expert Support

Round-the-clock support from our team of Spark optimization experts

10x Performance Boost for Major Retailer

How we optimized Spark jobs to reduce processing time from hours to minutes for a large-scale retail analytics platform.

Coming soon

Dynamic Real-time Risk Detection and Management

Implementing efficient Spark streaming for real-time financial risk assessment and fraud detection.

Coming soon

Predictive Maintenance with Spark ML

Leveraging Spark's machine learning capabilities to predict equipment failures and optimize maintenance schedules in manufacturing.

Coming soon

Best Practices for Spark Optimization

A comprehensive guide to optimizing your Spark applications for maximum performance and efficiency.

Coming soon

Next-Generation Solutions for Big Data Analytics

Exploring the latest trends and innovations shaping the future of big data analytics and data processing.

Coming soon

Accelerating Data Science with Spark

Discover how Spark's powerful data processing capabilities can turbocharge your data science workflows and projects.

Coming soon